PolitiFact’s Own Truth Rating? Biased

By MATT SHAPIRO

When it comes to fact-checking, no organization is better known than PolitiFact. The online fact-checking project operated by the Tampa Bay Times is synonymous with the concept of the fact-check as a genre of journalism and even won a Pulitzer for its coverage of the 2008 presidential campaign.

But despite these accolades, there are frequent complaints about PolitiFact’s bias. Unfortunately, arguing that PolitiFact harbors a systemic bias is a fool’s errand, because the argument inevitably goes like this:

Skeptic: PolitiFact is biased. They call true things “Mostly False” or “Half True.”

Believer: Give me an example.

S: Here you go.

B: But there was a certain way where, if you look at it from a particular angle, that claim isn’t totally true.

S: But the substantive fact was accurate.

B: But there are extenuating facts.

S: When a Democrat said the exact same thing, they called it “Mostly True.”

B: Yeah, but those statements aren’t the exact same thing; they are slightly different.

S: What? How?

B: Because the wording is slightly different.

S: Are you on drugs?

B: It’s just one example. Give me another one.

(Editor’s Note: Four days after this blog post was published, PolitiFact updated its fact-check so that the two identical statements received the same truth rating. Despite my complaining to them several times about this example, they didn’t do anything about it until this blog post blew up.)

Feel free to play this conversation on a loop, because that is exactly what ends up happening. The next example is met with the same deference to PolitiFact, treating its honesty as a baseline principle rather than a quality that needs to be verified. Defenders of PolitiFact make excuses for any given article and, in the face of clear bias, insist that a single article doesn’t prove the existence of a trend.

PolitiFact itself encourages people to accept its truth ratings unquestioningly through its marketing strategy. When judging a given politician, PolitiFact aggregates its ratings in a way that encourages people to look not at the truth value of any individual claim, but at the “larger” picture of the speaker’s commitment to the truth.

The assumption is that this or that article might have a problem, but you have to look at the “big picture” of dozens of fact-checks, which inevitably means glossing over the fact that biased details do not add up to an unbiased whole.

Whenever you see the “Republicans Lie More Than Democrats” story, whether it is in The Atlantic, the New York Times, Politico or Salon, you’ll find that the data to support this claim comes from PolitiFact’s aggregate truth metrics.

Using collective data instead of individual cases lets PolitiFact gloss over individual articles that delivered a questionable rating; comparable situations for two speakers that PolitiFact treated differently; biased selection of facts; and instances in which PolitiFact made an editorial decision to check one speaker over another.

We could list instance after instance in which we might feel PolitiFact was being unfair, but all those details are washed away when we look at the aggregate totals. And those totals certainly seem to run heavily against politicians with an “R” next to their name.

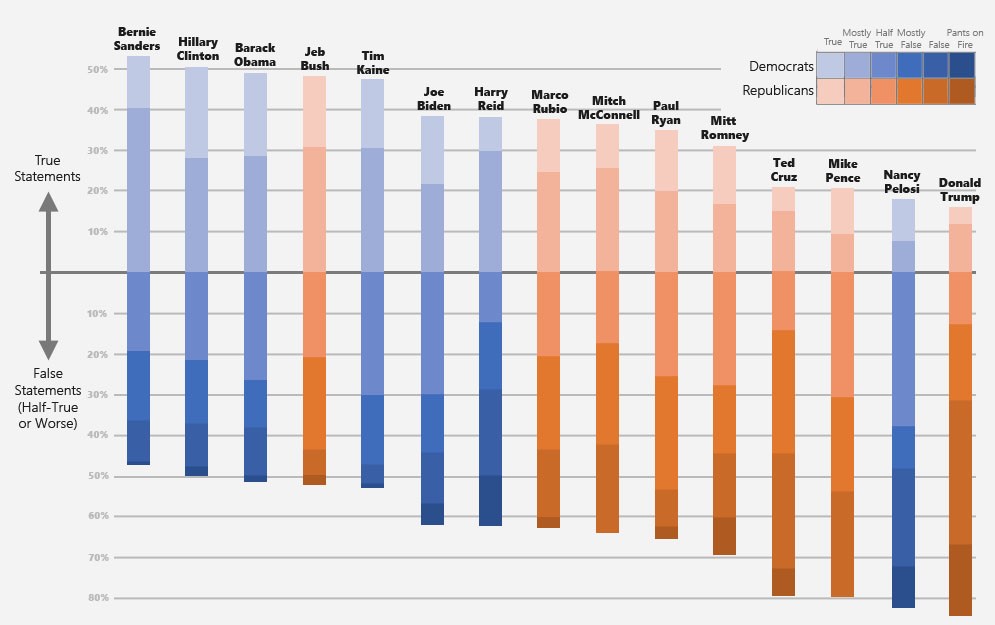

In the chart, we’ve ranked politicians by their truthfulness as rated by PolitiFact. Everything above the line is rated “true” or “mostly true.” The individuals are ordered by their PolitiFact truth ratings from left to right, making Bernie Sanders the most reliably truthful and Donald Trump the least.

A keen observer may notice a pretty clean split: Democrats dominate the “more truthful” side and Republicans dominate the “liars” corner.

Of course, the casual explanation is that Republicans lie more often than Democrats. And, absent a detailed analysis and reclassification of thousands of articles, there is no good way to disprove this.

Unless, of course, you decide to scrape PolitiFact’s website for these thousands of articles and run your own analytics on that data. If someone were to do this, what sort of patterns would be visible beyond the simple truth value totals?

I’m so very glad you asked, because the answer is a lot of fun.

What we found was far too much to cover in a single article. Here we’ll look briefly at “truth averages” and see how PolitiFact ranks individuals and groups, followed by an analysis of how exactly they differentiate between Republicans and Democrats. In the next piece, we’ll examine how they choose facts and look specifically at how PolitiFact fact-checked the 2016 race.

The Truth, On Average

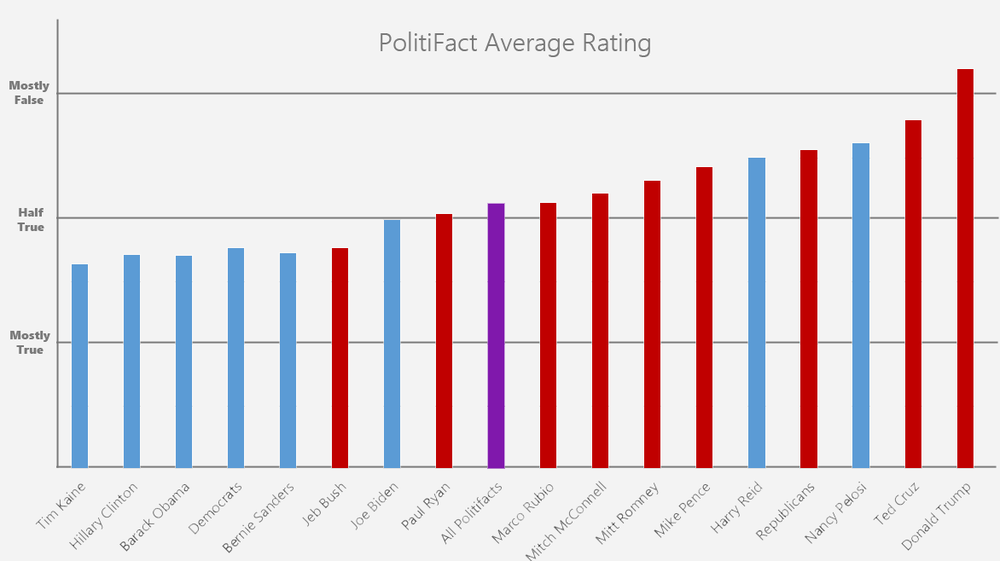

We decided to start by ranking truth values to see how PolitiFact rates different individuals and aggregate groups on a truth scale. PolitiFact has six ratings: True, Mostly True, Half-True, Mostly False, False, and Pants on Fire. Giving each of these a value from 0 (True) to 5 (Pants on Fire), we can find an “average ruling” for each person and for groups of people, where a lower number means a more truthful record.
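As an illustration of that averaging step, here is a minimal sketch, not our actual analysis code. It assumes each scraped fact-check is stored as a dictionary with hypothetical `speaker`, `party` and `rating` fields and that ratings use the label strings below; the records are made-up toy data. The `exclude_speakers` option shows how a group average can be recomputed without one person, as we did for Republicans without Trump.

```python
from statistics import mean

# Numeric scale from the article: 0 = True (most truthful) ... 5 = Pants on Fire.
# The exact label strings are an assumption about how scraped ratings are stored.
RATING_SCORES = {
    "True": 0,
    "Mostly True": 1,
    "Half-True": 2,
    "Mostly False": 3,
    "False": 4,
    "Pants on Fire": 5,
}


def average_ruling(ratings):
    """Mean numeric score for an iterable of rating labels (lower = more truthful)."""
    return mean(RATING_SCORES[r] for r in ratings)


def average_by_group(records, key, exclude_speakers=()):
    """Group records by `key` (e.g. "party" or "speaker") and average each group.

    Records whose speaker is in `exclude_speakers` are skipped first.
    """
    groups = {}
    for rec in records:
        if rec["speaker"] in exclude_speakers:
            continue
        groups.setdefault(rec[key], []).append(rec["rating"])
    return {group: average_ruling(ratings) for group, ratings in groups.items()}


# Made-up toy records, not the article's data.
records = [
    {"speaker": "Speaker A", "party": "D", "rating": "True"},
    {"speaker": "Speaker A", "party": "D", "rating": "Half-True"},
    {"speaker": "Speaker B", "party": "R", "rating": "Mostly True"},
    {"speaker": "Speaker C", "party": "R", "rating": "Pants on Fire"},
]

print(average_by_group(records, "party"))
print(average_by_group(records, "party", exclude_speakers={"Speaker C"}))
```

An average like this treats the gap between every pair of adjacent ratings as equal, which is a simplification worth keeping in mind when reading the numbers below.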

When fact checked by PolitiFact, Democrats had an average rating of 1.8, which is between “Mostly True” and “Half True.” The average Republican rating was 2.6, which is between “Half-True” and “Mostly False.” We also checked Republicans without president-elect Donald Trump in the mix and that 0.8 truth gap narrowed to 0.5.

This election season was particularly curious because PolitiFact rated Hillary Clinton and Tim Kaine as two of the most honest people among the 20 politicians we included in our data scrape (1.8 and 1.6, respectively) while Trump was rated as the most dishonest (3.2).

Using averages alone, we already start to see some interesting patterns in the data. PolitiFact is much more likely to give Republicans its worst-of-the-worst “Pants on Fire” rating, which is usually reserved for when it feels a candidate is not only wrong, but lying in an aggressive and malicious way.

All by himself, Trump has almost half of all the “Pants on Fire” ratings from the articles we scraped.
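A share like “almost half of all Pants on Fire ratings” comes from counting one rating per speaker and dividing by the total. The sketch below shows that calculation under the same assumption as before: scraped records are dictionaries with hypothetical `speaker` and `rating` fields, and the records shown are made-up toy data.

```python
from collections import Counter


def rating_share_by_speaker(records, rating="Pants on Fire"):
    """Fraction of all `rating` rulings that went to each speaker."""
    counts = Counter(rec["speaker"] for rec in records if rec["rating"] == rating)
    total = sum(counts.values())
    if total == 0:
        return {}  # no rulings of this kind in the data
    return {speaker: n / total for speaker, n in counts.items()}


# Made-up toy records, not the article's data.
records = [
    {"speaker": "Speaker X", "rating": "Pants on Fire"},
    {"speaker": "Speaker X", "rating": "Pants on Fire"},
    {"speaker": "Speaker Y", "rating": "Pants on Fire"},
    {"speaker": "Speaker Z", "rating": "Pants on Fire"},
    {"speaker": "Speaker Z", "rating": "True"},
]

shares = rating_share_by_speaker(records)
print(shares)
```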

Even outside of Trump, PolitiFact seems to assign this rating in a particularly uneven way. During the 2012 election season, PolitiFact assigned Mitt Romney 19 “Pants on Fire” ratings. For comparison, across all Democrats combined from 2007 to 2016, the “Pants on Fire” rating was assigned only 25 times.

This seems to indicate that Mitt Romney wasn’t just a liar, but an insane raving liar, spewing malicious deceit at every possible opportunity. In the mere two years he was in the spotlight as a Republican presidential nominee, Romney somehow managed to rival the falsehoods told by the entire Democratic Party over the course of a decade.

Or it is also possible that PolitiFact has a bit of a slant in its coverage.

Mitt Romney did not strike me as a particularly egregious liar, nor has Hillary Clinton struck me as the single most honest politician to run for president in the last 10 years.

Take a moment, a deep breath, and ponder this: If we decide we’re OK using PolitiFact’s aggregate fact-checking to declare Republicans more dishonest than Democrats, we have also committed ourselves to a metric in which Hillary Clinton is more honest than Barack Obama.

That doesn’t pass the sniff test, no matter which group you call your ideological home.

But a sniff test isn’t data, so let’s dig deeper.

When You’re Explaining, You’re Losing

We started by looking at the lopsided way in which PolitiFact grants ratings. Now it’s time to look at the nature of the articles PolitiFact writes.

The most interesting metric we found when examining PolitiFact articles was word count. We found that word count was indicative of how much explaining a given article has to do in order to justify its rating.

For example, if a statement is plainly true or clearly false, PolitiFact will simply provide the statement and analyze it according to a widely respected data source. It takes under 100 words to verify Mitt Romney’s statement on out-of-wedlock births or Hillary Clinton’s statement on CEO pay.

By contrast, when you look at their articles on Mitt Romney’s statement on Obama apologizing for the US (a statement they fact-checked no fewer than five times), PolitiFact did an in-depth analysis of what exactly it means to “apologize” for something. They looked at Romney’s statement, asked his team for a response, parsed the response, got other “expert” opinions, and combed through Obama’s statements. The first three times they fact-checked this, PolitiFact used 3,000-plus words per article to explain that Romney was wrong. Each time, their rating relied on what exactly it means to “apologize.” They end up writing pieces in which they sound a lot like a parent scolding a small child about how they have to “really mean” their apology or it doesn’t count.

We discovered that the articles that take the most words are the ones in which PolitiFact needs to explain why you might think something is true (or false), but you are wrong and it is, as a matter of fact, false (or true).

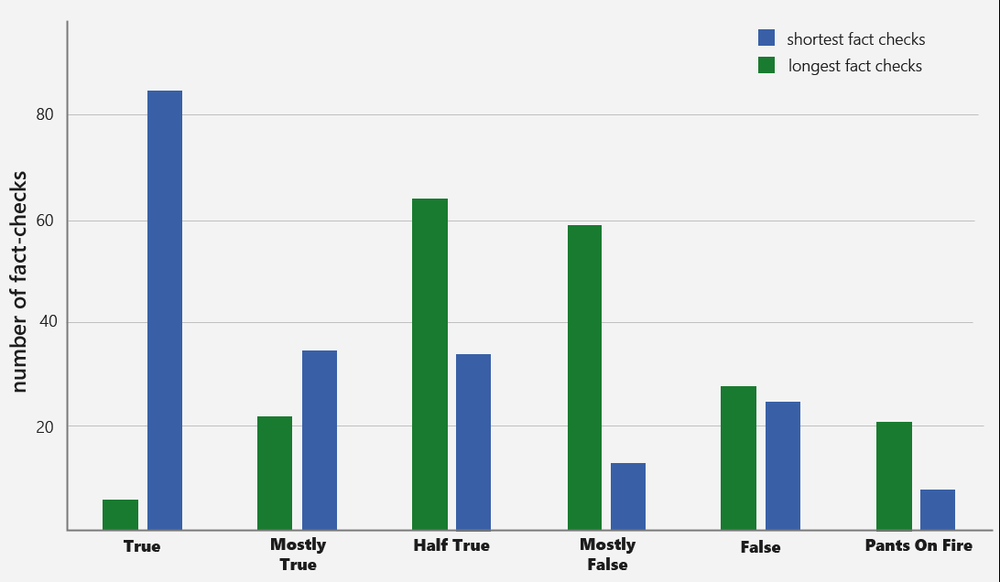

We found this pretty consistently. If you look at the shortest 10% of all fact-checks, two-thirds of them are rated “True” or “Mostly True.”

Conversely, two-thirds of the longest 10% of fact-checks are rated “Half True” or “Mostly False,” ratings we typically found to be fairly subjective.
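The shortest-10% and longest-10% comparison can be sketched as follows: sort fact-checks by word count, take the tail at one end, and measure what share of it carries a given set of ratings. This is a minimal illustration, assuming hypothetical `words` and `rating` fields; the ten articles are made-up toy data, so the usage uses a 20% tail to get more than one article.

```python
def share_in_length_tail(articles, labels, fraction=0.10, shortest=True):
    """Share of the shortest (or longest) `fraction` of articles rated with one of `labels`."""
    ranked = sorted(articles, key=lambda a: a["words"])
    n = max(1, int(len(ranked) * fraction))  # size of the tail, at least one article
    tail = ranked[:n] if shortest else ranked[-n:]
    return sum(a["rating"] in labels for a in tail) / n


# Made-up toy articles, not the article's data.
articles = [
    {"words": 90, "rating": "True"},
    {"words": 120, "rating": "Mostly True"},
    {"words": 300, "rating": "Half-True"},
    {"words": 500, "rating": "True"},
    {"words": 600, "rating": "Mostly False"},
    {"words": 700, "rating": "Mostly False"},
    {"words": 800, "rating": "Half-True"},
    {"words": 900, "rating": "Mostly True"},
    {"words": 1500, "rating": "Half-True"},
    {"words": 2000, "rating": "False"},
]

print(share_in_length_tail(articles, {"True", "Mostly True"}, fraction=0.2))
print(share_in_length_tail(articles, {"Half-True", "Mostly False"}, fraction=0.2, shortest=False))
```

Truncating with `int()` means a tail never contains a partial article; with thousands of fact-checks the rounding makes no practical difference.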

They say that “when you’re explaining, you’re losing,” and we can see this in effect here. It is vital for the PolitiFact brand that they boil down all explanations into a metric that simply says “True” or “Mostly False.” When they need to explain their rating, they are frequently in the process of convincing us of a very subjective call. The more plainly subjective a fact-check is, the more likely it is to be less a fact-check than just another opinion.

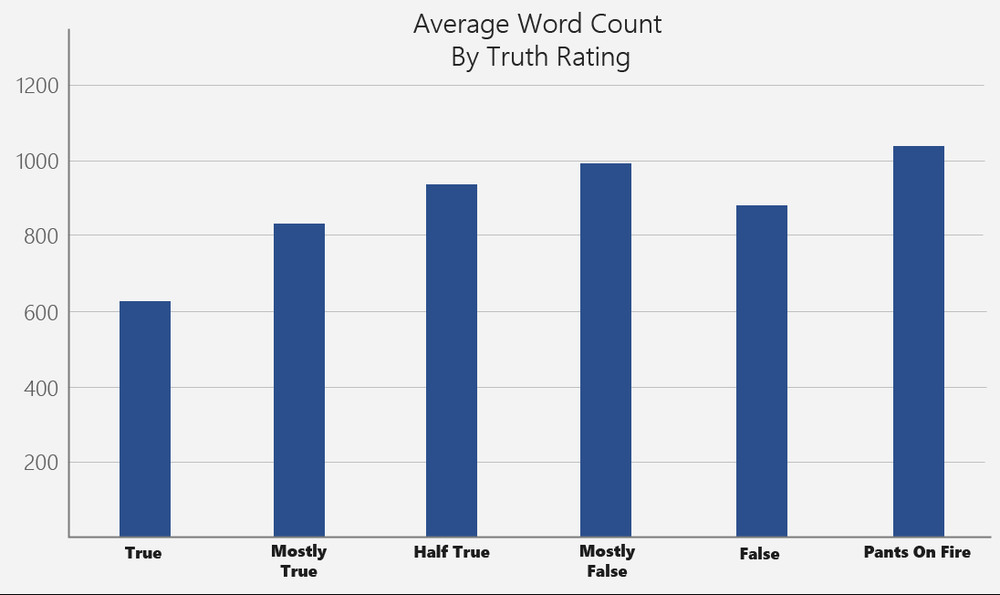

Of the 2,000+ articles we scanned, we found an average of 871 words per article. These were not distributed evenly; True and Mostly True articles had the lowest word counts, while Pants on Fire showed the highest.
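Average word counts per rating, and per party and rating together, are a simple group-and-average over the scraped articles. The sketch below is one way to compute them, assuming hypothetical `party`, `rating` and `words` fields and using made-up toy data; grouping on more than one field yields tuple keys such as `("R", "Mostly False")`.

```python
from collections import defaultdict
from statistics import mean


def mean_words_by(articles, *keys):
    """Average word count per group, grouping on one or more fields (e.g. "party", "rating")."""
    groups = defaultdict(list)
    for a in articles:
        groups[tuple(a[k] for k in keys)].append(a["words"])
    return {group: mean(words) for group, words in groups.items()}


# Made-up toy articles, not the article's data.
articles = [
    {"party": "D", "rating": "True", "words": 400},
    {"party": "R", "rating": "True", "words": 500},
    {"party": "D", "rating": "Mostly False", "words": 900},
    {"party": "R", "rating": "Mostly False", "words": 1300},
    {"party": "R", "rating": "Mostly False", "words": 1100},
]

print(mean_words_by(articles, "rating"))
print(mean_words_by(articles, "party", "rating"))
```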

The simplest fact-checks are the ones that come down as either true or false. They are able to look at the statement, assess the truth value, and reach a rating. It is the in-between ratings (and “Pants On Fire,” which requires the statement to be both false and malicious) that take time to explain. We see this across the board in fact-checks against both Republicans and Democrats.

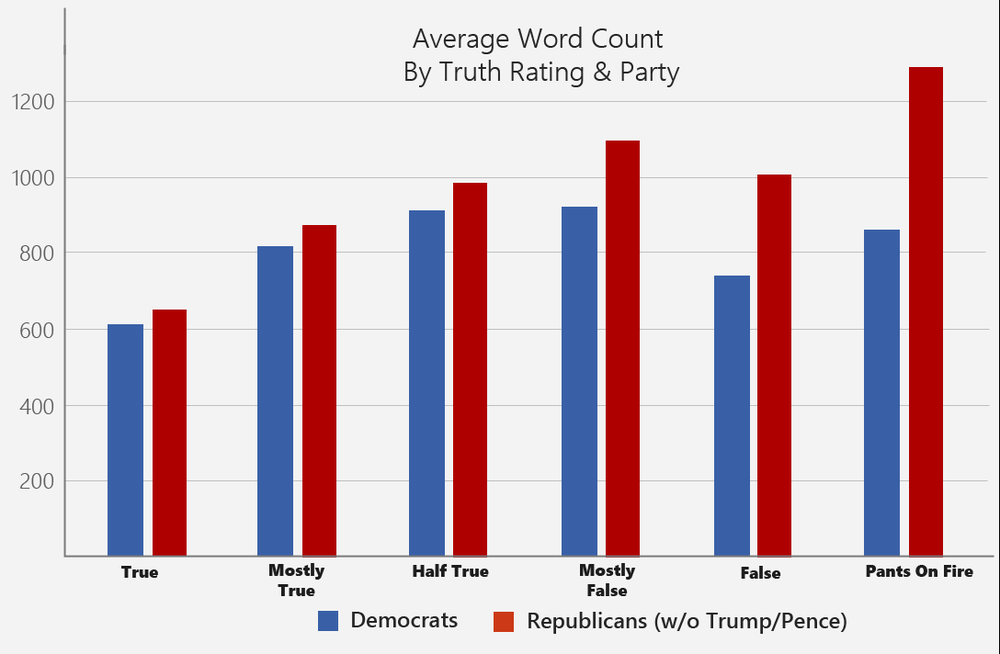

But while the trends are there across all PolitiFact fact-checks, there is an astounding divergence when we look at word counts by party.

As you can see, articles for Republicans are only marginally longer when the rating is True, Mostly True, or Half-True. When we start getting into the false categories, articles looking at GOP statements start getting substantially longer.

Mostly False is PolitiFact’s most frequent rating for Republicans who aren’t named Trump (more on that in the next piece). We’ve found that PolitiFact often rates statements that are largely true but come from GOP sources as “mostly false” by focusing on sentence alterations, simple misstatements, fact-checking the wrong fact, and even taking a statement, rewording it, and fact-checking the reworded statement instead of the original quoted statement.

Doing this takes time and many, many words.

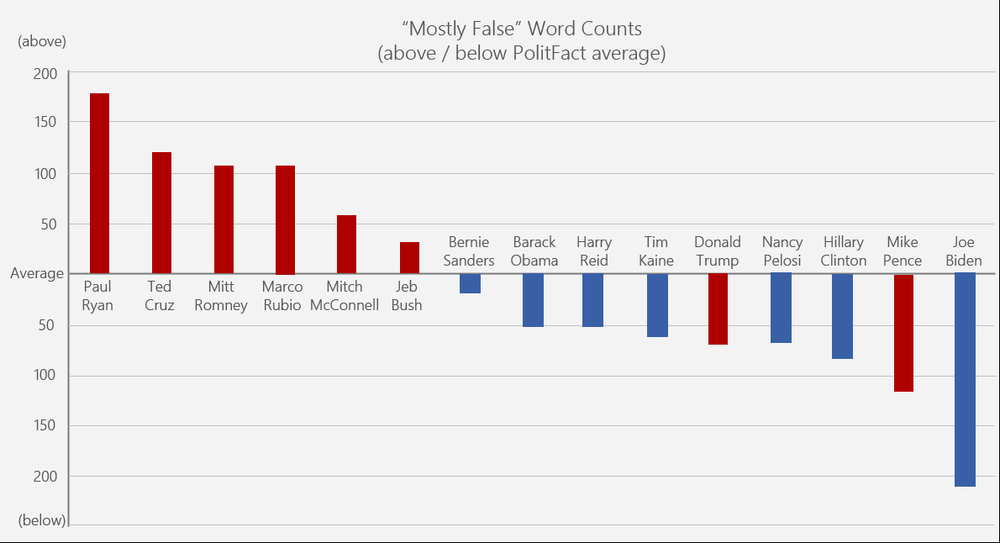

If we look at how many words it takes to evaluate a “mostly false” position, we find that every speaker who has an above-average word count is a Republican.
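Finding which speakers run above average for one rating means filtering to that rating, computing a baseline, and comparing each speaker’s mean against it. The article doesn’t say which baseline it used, so this sketch assumes by default the mean across all articles with that rating and lets you pass a different number instead. Field names (`speaker`, `rating`, `words`) are hypothetical and the records are made-up toy data.

```python
from collections import defaultdict
from statistics import mean


def speakers_above_average(articles, rating="Mostly False", baseline=None):
    """Speakers whose mean word count for `rating` articles exceeds `baseline`.

    By default the baseline is the mean word count across all `rating` articles
    (an assumption); pass a number to compare against something else.
    """
    subset = [a for a in articles if a["rating"] == rating]
    if not subset:
        return []
    if baseline is None:
        baseline = mean(a["words"] for a in subset)
    per_speaker = defaultdict(list)
    for a in subset:
        per_speaker[a["speaker"]].append(a["words"])
    return sorted(s for s, words in per_speaker.items() if mean(words) > baseline)


# Made-up toy articles, not the article's data.
articles = [
    {"speaker": "Speaker A", "rating": "Mostly False", "words": 700},
    {"speaker": "Speaker B", "rating": "Mostly False", "words": 1200},
    {"speaker": "Speaker B", "rating": "Mostly False", "words": 1000},
    {"speaker": "Speaker C", "rating": "Mostly False", "words": 500},
    {"speaker": "Speaker C", "rating": "True", "words": 3000},
]

print(speakers_above_average(articles))
print(speakers_above_average(articles, baseline=600))
```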

But we see something else interesting here: Donald Trump and Mike Pence, two of the most frequently fact-checked Republicans in the last year, buck this trend substantially. In fact, they buck this trend across all truth categories.

We thought this was something worth investigating. In the next piece, we’ll look at the curious case of Donald Trump and PolitiFact.

Matt Shapiro is a software engineer, data vis designer, genetics data hobbyist, and technical educator based in Seattle. He tweets under @politicalmath, where he is occasionally right about some things.
